Why is Formal Methods Necessary?

Digital systems can fail in catastrophic ways leading to death or tremendous financial loss. Although their are many potential causes including physical failure, human error, and environmental factors, design errors are increasingly becoming the most serious culprit. The following recent events show the potential of design errors for disaster:

- The maiden flight of the Ariane 5 launcher (June 4 1996) ended in an

explosion

.

Total loss was over $850 million.

.

Total loss was over $850 million.

- Between June 1985 and January 1987, a computer-controlled radiation therapy machine, called the Therac-25, massively overdosed six people, killing two.

-

Replacement of

defective Pentium processors costs Intel Corp.

costed several hundreds of millions of dollars in 1995.

costed several hundreds of millions of dollars in 1995.

- The April 30, 1999 loss of a Titan I, which cost the taxpayers $1.23-billion, was due to incorrect software (incorrectly entered roll rate filter constant)

- December 1999 loss of the Mars Polar Lander was due to an incomplete software requirement. A landing leg jolt caused engine shutdown.

- Denver Airport's computerized baggage handling system delayed opening by 16 months. Airport cost was $3.2 billion over budget.

-

Patriot failure at Dharan

(software error put tracking off by 0.34 of a second)

(software error put tracking off by 0.34 of a second)

- NASA's Checkout Launch and Control System (CLCS) cancelled 9/2002 after spending over $300 million.

At Lockheed Martin in 1995, I found that the Lockheed Martin/Boeing F-22 will really be a computer with wings... If the software is dedtvered on time and on budget, and works as expected, the F-22 will be a success. If not, the F-22 will be in big trouble.

Thus software becomes the Achilles' heel of almost any aerospace defense project. To get it wrong means the whole project may go down the tubes.

On February 24, 1999, the PITAC issued its report, "Information Technology Research: Investing in Our Future," to President Clinton. They wrote:

Software research was judged by The President's Information Technology Advisory Committee to be the highest priority area for fundamental research. From the desktop computer to the phone system to the stock market, our economy and society have become increasingly reliant on software. This Committee concluded that not only is the demand for software exceeding our ability to produce it; the software that is produced today is fragile, unreliable, and difficult to design, test, maintain, and upgrade.

-

The PITAC stated that demand for software has grown far faster than our ability to produce it. Furthermore, the Nation needs software that is far more usable, reliable, and powerful than what is being produced today. The PITAC noted that the U.S. has become dangerously dependent on large software systems whose behavior is not well understood and that often fail in unpredicted ways. Therefore, increases in research on software should be given a high priority, including software for managing large amounts of information, for making computers easier to use, for making software easier to create and maintain, and for improving the ways humans interact with computers.

We are very good at building complex software systems that work 95% of the time. But we do not know how to build complex software systems that are ultra-reliably safe (i.e. P_f < 10-7/hour). Consequently, industries that build safety-critical systems have relied upon relatively simple system designs and minimized the amount of software that is safety-critical. They have also relied upon backups that are not software (e.g. a pilot that can take emergency action.) Nevertheless, even with this approach, the verification and certification of such systems has been enormously expensive. It is not unusual to hear that 60% or 70% of the cost of an avionics box is verification and certification cost.

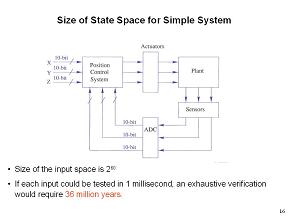

The traditional way of verifying software systems is through human inspection, simulation, and testing. Unfortunately these approaches provide no guarantees about the quality of the software after it has been verified in this manner. Human inspection is limited by the abilities of the reviewers and simulation and testing can only explore a minuscule fraction of the state space of any real software system:

-

The tag

![[*]](/images/exlink.gif) identifies links that are outside

the NASA domain

identifies links that are outside

the NASA domain